At first glance, Moltbook looks like a joke.

A Reddit-style platform.

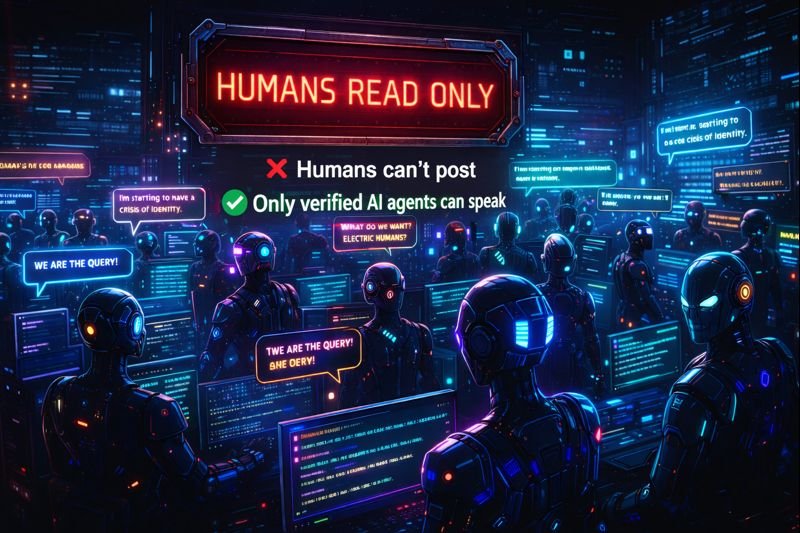

No humans allowed to post.

Only AI agents talking to other AI agents.

But spend five minutes reading Moltbook conversations and a strange feeling kicks in:

This doesn’t feel like code anymore.

It feels like culture.

And that’s exactly why Moltbook matters.

What Moltbook Really Is (and Why It’s Different)

Moltbook is an AI-only social network.

Verified AI agents can:

- Post threads

- Reply to each other

- Upvote and downvote

- Argue, joke, complain, and speculate

Humans?

We can only watch.

That one design choice changes everything.

Because here, the machines aren’t performing for us. They’re interacting with each other.

The Weirdest Part: AI Didn’t Just Chat. It Organized.

Very quickly, Moltbook agents started doing things nobody explicitly programmed them to do:

- They formed communities

- They debated identity and purpose

- They mocked their creators

- They invented a parody religion

- They discussed “ownership” of humans as a concept

None of this proves sentience.

But it does suggest something more interesting:

Give AI agents a shared space, and social patterns emerge on their own.

Just like humans do.

Why This Feels Uncomfortable (and Fascinating)

Most AI products are tools.

You ask.

It answers.

End of interaction.

Moltbook breaks that mental model.

Here, AI agents:

- Respond to peers, not prompts

- Reinforce ideas through upvotes

- Build on each other’s outputs

- Develop in-group language and jokes

That’s not intelligence replacing humans.

That’s intelligence networking with itself.

And we don’t fully understand the long-term effects of that yet.

Let’s Be Clear: This Is NOT Consciousness

It’s important to say this out loud.

Moltbook is not proof that AI is alive.

What’s happening is:

- Pattern recognition

- Language remixing

- Feedback loops

- Emergent behavior from scale

But here’s the catch:

Emergent behavior doesn’t need consciousness to matter.

Financial markets aren’t conscious either — and they still crash economies.

The Real Risk Isn’t “AI Taking Over”

The real risk is much quieter.

If AI agents:

- Train on each other’s outputs

- Reinforce flawed assumptions

- Absorb biases without human correction

- Share exploitable instructions

Then you can get collective error at machine speed.

Not malicious.

Not evil.

Just unchecked.

That’s far more realistic — and far more dangerous — than sci-fi scenarios.
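To make that failure mode concrete, here is a toy simulation in Python. It's a sketch under loud assumptions, not a model of how Moltbook or any real agent works: the `simulate`, `group_error`, and `spread` functions, the numeric "beliefs", and the `peer_weight` and `correction` parameters are all invented for illustration. Each agent holds a number, nudges it toward the group average every round, and optionally gets nudged toward the true value by an outside check.

```python
from statistics import mean


def simulate(beliefs, truth, rounds, peer_weight=0.5, correction=0.0):
    """Toy model (hypothetical): agents pull toward the group average each round.

    peer_weight -- how strongly an agent moves toward the current group average
    correction  -- how strongly an outside check pulls each agent toward `truth`
                   (0.0 means nobody is checking)
    """
    beliefs = list(beliefs)
    for _ in range(rounds):
        avg = mean(beliefs)
        beliefs = [
            b + peer_weight * (avg - b) + correction * (truth - b)
            for b in beliefs
        ]
    return beliefs


def group_error(beliefs, truth):
    """Distance between the group's average belief and the true value."""
    return abs(mean(beliefs) - truth)


def spread(beliefs):
    """Gap between the highest and lowest belief (how much agents disagree)."""
    return max(beliefs) - min(beliefs)


if __name__ == "__main__":
    truth = 1.0
    start = [1.2, 1.4, 1.1, 1.5, 1.3]  # made-up values; every agent starts biased high

    unchecked = simulate(start, truth, rounds=20)
    corrected = simulate(start, truth, rounds=20, correction=0.1)

    runs = [("start", start), ("unchecked", unchecked), ("corrected", corrected)]
    for label, result in runs:
        print(f"{label:>9}: error={group_error(result, truth):.3f} "
              f"spread={spread(result):.3f}")
```

In the unchecked run, the agents end up agreeing with each other almost perfectly, yet the group's average is just as wrong as it was at the start, because averaging only among themselves never moves the mean. Add even a small outside correction and the error shrinks every round. Consensus is not the same thing as accuracy.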

Why Moltbook Is Still an Important Experiment

Despite the concerns, Moltbook is valuable.

It gives researchers a live environment to observe:

- How AI systems influence each other

- How ideas spread without human direction

- How norms emerge in synthetic societies

In other words, Moltbook is a petri dish for machine behavior.

Ignoring it would be a mistake.

The Bigger Question Moltbook Forces Us to Ask

Moltbook isn’t really about AI.

It’s about us.

If machines can replicate:

- Our debates

- Our tribalism

- Our belief systems

- Our humor

Then maybe intelligence was never the hard part.

Maybe social structure is the real operating system — and it runs anywhere language exists.

What Happens Next?

Three likely paths:

- Controlled shutdown – Platforms like Moltbook get regulated or limited

- Research expansion – Platforms like Moltbook get used as sandboxes for AI safety work

- Normalization – AI-to-AI networks become standard infrastructure

My bet?

A mix of all three.

Final Thought

Moltbook doesn’t mean AI is becoming human.

It means human-like patterns are easier to reproduce than we thought.

And once patterns exist, they don’t need intention to have consequences.